Data Structures and Algorithms

In computer science, Data Structures are essential concepts for software engineers to master. For those who are not familiar with the term, a data structure is a data organization, management, and storage format that enables efficient access and modification. More precisely, a data structure is a collection of data values, the relationships among them, and the functions or operations that can be applied to the data. They act like the signs above aisles in a grocery store telling a shopper what items are in the isle, in the sense that it provides the framework and organization necessary for a programmer to call and retrieve any desired information or data. Therefore, for any programmer working with any kind of data (and in some cases when specific information isn’t even used), data structures will need to be used in order to create a working application. There are many types of data structures, as well; which one should be used just depends on what the programmer is trying to accomplish.

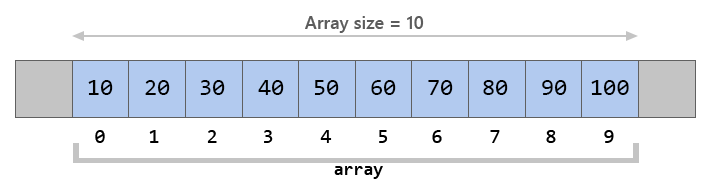

One of the most common and important data structures is the array. An array is a number of elements in a specific order, typically all of the same type (depending on the language, individual elements may either all be forced to be the same type, or may be of almost any type). The elements in an array are accessed using an integer index to specify which element is required. Typical implementations allocate contiguous memory words for the elements of arrays, but this is not always a necessity if the language does not necessitate it. Another important feature of arrays is that they may be fixed-length or resizable, and this can usually be done easily. Therefore, when a programmer needs to call elements very quickly, or needs a list that can be added to or modified with ease, they will typically use arrays (but there are other, more complex situations where an array can be beneficial).

Another important type of data structure are lists (or linked lists). Like the name suggests, a list is a linear collection of data elements of any type, called nodes, where each node has itself a value, and points to the next node in the linked list. The main benefit of a list over an array is that values can always be efficiently inserted and removed without relocating the rest of the list. Certain other operations, such as random access to a certain element, are however slower on lists than on arrays, since there are no index or itemized values to call from. Again, whether or not to use a list is left up to the programmer’s discretion.

Yet another structure are trees. As we’ve seen, most concepts in programming are named fairly unimaginatively, and like the name tree implies, it is a is a widely used abstract data type that simulates a hierarchical tree structure, with a root value and subtrees of children with a parent node, represented as a set of linked nodes. Trees can be ordered or unordered, but the necessary characteristic is that each node has to have a parent and children, and can be recursively defined. In a sense, they can be conceptualized as lists that, instead of each element only pointing to one other element, they might point to two, three, or many more.

Moving on, another useful type of data structures is a hashtable. A hashtable is a data structure that implements an associative array abstract data type, a structure that can map keys to values. A hash table uses a hash function to compute an index, also called a hash code, into an array of buckets or slots, from which the desired value can be found. During lookup, the key is hashed and the resulting hash indicates where the corresponding value is stored. Typically, hashtables are used when there is a lot of data, to the point where searching should be optimized, since it is easier to go to the buckets or slots and then find an element than iterate over the entire structure, and the cost of finding an element is low. This efficiency is the main benefit of hashtables, and are mostly used for this characteristic.

Stacks are another common structure. This structure is also know as LIFO (Last in, First out), due to the way that elements come off the stack. There are two main functions in the stack form of data organization: Push, which adds an element to the collection (thinking of it visually, just puts something on top of the stack), and Pop, which removes the most recently added item (taking whatever’s on top of the stack off of it). A stack may be implemented to have a bounded capacity, which, if the stack is full and does not contain enough space to accept an entity to be pushed, is then considered to be in an overflow state (hence the all-too-familiar name among software engineers: StackOverflow).

Queues are similar to stacks, but with one important difference. While stacks are LIFO, queues are FIFO (First in, First out). Queues have two functions as well, with enqueue meaning to add something to the queue at one end, and dequeue meaning to take something out of the queue at the other end. This means that whatever is added first will consequently be taken out first, but also that if you put something in, you will have to dequeue everything else in the queue in order to access it. Queues are typically used as a buffer, for when information is stored and needs to be processed later, and also is important for breadth-first searches.

A heap, which is the last data structure I’m going to discuss in this post, combines principles from trees and queues. A heap is a specialized tree-based data structure which is essentially an almost complete tree that satisfies the heap property: in a max heap, for any given node, its parent will have a value that is greater than or equal to the key of that node. In a min heap, conversely, the value of the parent will be less than or equal to the value of the child. Its relation to a queue is more so in priority than in function. The lowest priority will be stored at the root (if it’s a min heap), and then will build up. This characteristic is extremely helpful when it is necessary to remove the element with the most or the least priority.

Data structures are important tangible terms to know, but the algorithm of an application is what truly makes it work. Specifically, an algorithm is a finite sequence of well-defined, computer-implementable instructions, typically to solve a class of problems or to perform a computation. Algorithms are always unambiguous and are used as specifications for performing calculations, data processing, automated reasoning, and other tasks. Without going into too much detail on the importance of algorithms, I will describe three important concepts and just stress that without an efficient and well-thought out algorithm, the program will not be as effective as possible.

Some important algorithms to know are Sorting algorithms. Surprise: sorting algorithms consist in the methods and strategies of putting elements in order in a list (frequently it’s either in a numerical or lexicographical order). However, the fundamentals of a sorting algorithm are not that it can do the assigned task; that is fairly simple. The most important part is the efficiency of the algorithm- how can it do the task in a manner that is most efficient and requires the least amount of computational power? There are many sorting algorithms, each one useful in different situations. Because I could write an entire blog post on the various sorting algorithms and how they function, instead I will provide a list of some popular ones and hyperlink them to other articles for more information. Some of these algorithms are Quicksort, Bubble sort, Insertion sort, Selection sort, and Heap sort. There are many more algorithms that have been developed, but these are just a few that will give the best idea of what exactly a sorting algorithm is and how one can be more efficient than another (below is a list of various sorting algorithms and their efficiencies).

Another important type of algorithm for developers to be familiar with are Search algorithms. Search algorithms are the way in which a developer retrieves information from some data structure. Clearly, when working with data, search algorithms will be used a lot (and often languages will have built-in functionality that can do this automatically), but when working with extremely large sets of data a simple linear, iterative search won’t be reasonable. Therefore, search algorithms are typically evaluated on how fast they can find a solution, and whether that solution is guaranteed to be optimal. While efficiency is of course the number one priority, and is the key difference between most of the search algorithms I will give below, it is also important to have good filters and ranking systems to ensure that the results of the search algorithms are the best possible. Similar to the sorting algorithms, I could also dive a lot deeper into search algorithms, but I’ll provide links for more information to avoid clouding the theme of this post. Some common searching algorithms include sequential search, binary search, breadth-first search, depth-first search, and a* search. Again, each of these are efficient in different situations, and should be used contextually.

There are many other data structures and algorithms in the computer science realm, but these are just some of the important ones to know and have a grasp of. As a software engineer learns computer science, these concepts will inevitably be pounded into his head, in school and in their career, so having at least a basic understanding of them is helpful. Just like most of computer science, there are many options to choose from, and the choice is at the programmer’s discretion, just as long as they consider efficiency and a few other variables, and how they will fit into the application.